Research

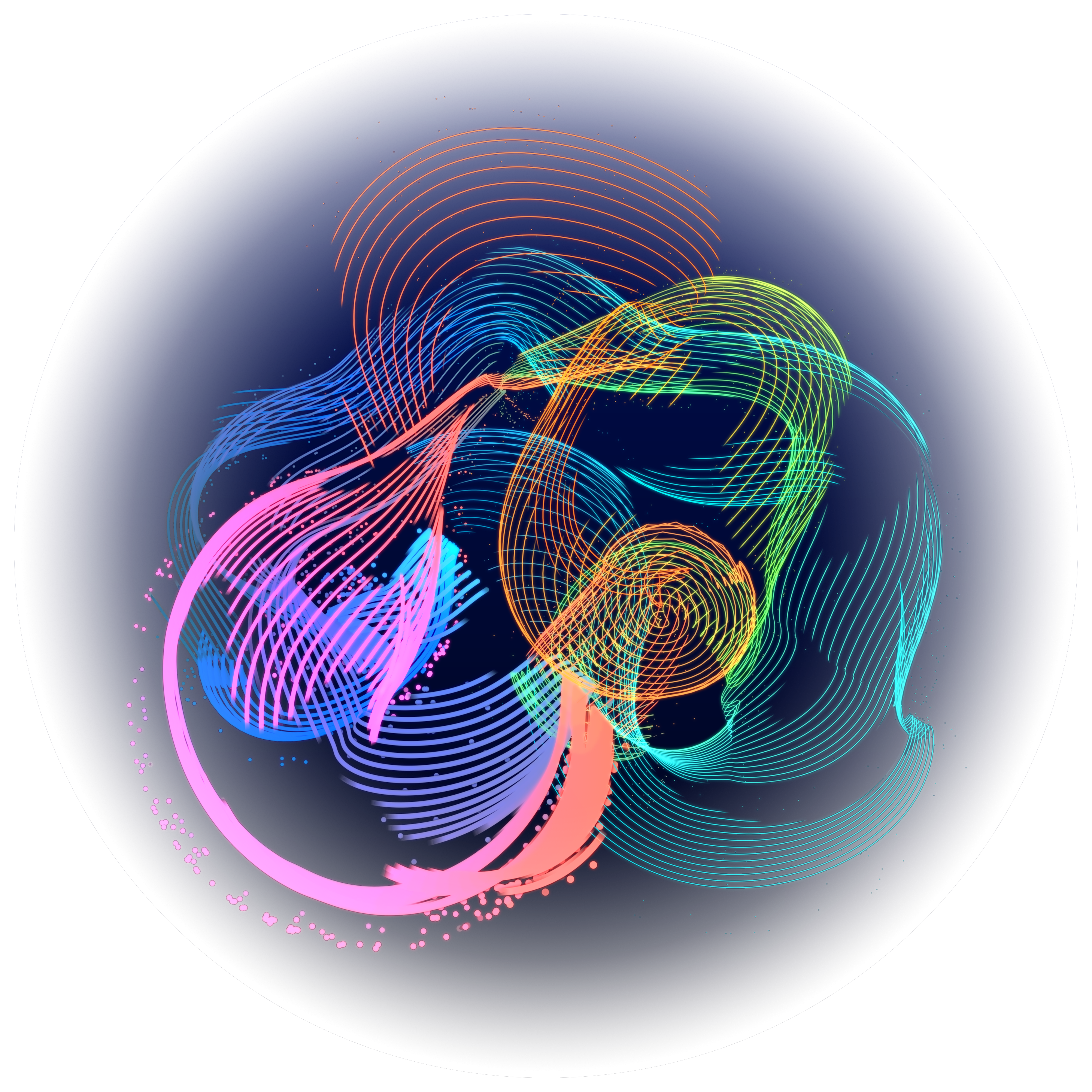

Emote VR Voicer, Image (c) by Adinda van ’t Klooster, 2025

Projects

Emote VR Voicer is an AHRC Catalyst Grant-funded project led by Dr Adinda van ’t Klooster. It builds on her two previous voice-controlled VR interfaces, VRoar and the AudioVirtualizer. Rather than only using live sound features to manipulate graphics, this interface also uses speech recognition and meaning classification to analyse the meaning of spoken words and their emotional intonation. Such AI capabilities are already publicly available through code libraries but have not yet been integrated into artistic projects in this way.

This project addresses the following research questions:

- How can we develop a real-time VR app that understands the meaning of the spoken or sung word and maps it semantically onto aesthetically rewarding graphics?

- Which mappings and designs work best for an interactive VR app that aims to encourage people to play with their voice and to increase wellbeing?

The graphics will be abstract: the aim is not to build an illustrative feedback system but an emotionally intelligent interactive system that encourages people to discover and extend the limits of their voice.

Project partners are:

- Dr Adinda van ’t Klooster is the project lead, and the project is her brainchild. Over more than twenty years as an independent artist, she has used a variety of live interactive audiovisual technologies to create immersive interactive interfaces, including VR art games, light and sound installations, interactive audiovisual performances and interactive sculpture. At SODA, Manchester Metropolitan University, she is a Senior Lecturer in Interdisciplinary Digital Art and teaches across courses and levels, up to PhD supervision.

- Dr Robyn Dowlen is a Research Fellow at Edge Hill University. She has an extensive background in culture, health and wellbeing. Robyn’s research centres on developing methods and approaches for capturing ‘in-the-moment’ experiences in a dementia context, exploring how music and other creative activities can support meaningful moments of connection for people with dementia and those who support them. In this project she advises on approaches to measuring wellbeing and undertakes part of the evaluation.

- Dr Jason Hockman is Reader in Music and Sound at Manchester Metropolitan University (SODA). He advises through periodic strategic consultations that steer the team towards relevant AI techniques and keep the project current and on track with regard to technological and methodological advances in the field.

- Prof. Carlo Harvey is a creative technologist whose interdisciplinary work blends games, computer graphics, machine learning, acoustics, virtual production and immersive media. On this project he advises on animation and game development processes and mentors Adinda, drawing on his experience of grant-funded research projects.

Pippa Anderson, a vocal coach and vocal rehabilitation specialist, helped with the first phase of the project, which included bringing the AudioVirtualizer and VRoar VR interfaces to the North East of England and evaluating them with singers.

We are currently recruiting for two Research Associate posts to support this project.

This project is funded by an AHRC Catalyst Grant (AH/Z506618/1) and supported by Manchester Metropolitan University, Edge Hill University and the Manchester Games Centre.

Books

van 't Klooster, A., 2021. 'Still Born, Second Edition', Affect Formations Publishing, Newcastle upon Tyne.

van 't Klooster, A., 2018. 'Still Born', Affect Formations Publishing, Newcastle upon Tyne.

Book Chapters

van 't Klooster, A., 2017. 'Electronic sound art and aesthetic experience', in The Cambridge Companion to Electronic Music, pp. 225-237.

Journal Articles

van 't Klooster, A., Dowlen, R., 2025. 'Can voice responsive VR art experiences improve wellbeing?', Baltic Screen Media Review.

van 't Klooster, A., Heazell, A.E.P., 2023. 'Each Egg a World online: giving a voice to bereaved parents and breaking the taboo on stillbirth', Brazilian Creative Industries Journal, 3 (2), pp. 94-106.

van 't Klooster, A., Collins, N., 2021. 'Virtual Reality and Audiovisual Experience in the AudioVirtualizer', EAI Endorsed Transactions on Creative Technologies, 8 (27), pp. 169037-169037.

van 't Klooster, A., 2018. 'Creating Emotion-Sensitive Interactive Artworks: Three Case Studies', Leonardo, 51 (3), pp. 239-245.

van 't Klooster, A., Collins, N., 2017. 'An emotion-aware interactive concert system: a case study in realtime physiological sensor analysis', Journal of New Music Research, 46 (3), pp. 261-269.

Conference Papers

van 't Klooster, A., Collins, N., 2024. 'Voice Responsive Virtual Reality', NIME 2024, Utrecht, Netherlands, 4/9/2024 - 6/9/2024, in Proceedings of the International Conference on New Interfaces for Musical Expression, pp. 346-350.

van 't Klooster, A., Collins, N., 2014. 'In a State: Live Emotion Detection and Visualisation for Music Performance', NIME2014 Conference, London, 30/6/2014 - 3/7/2014, in NIME2014 Conference Proceedings.

van 't Klooster, A., 2012. 'Music as a mediator of emotion', in ICMC 2012: Non-Cochlear Sound, Proceedings of the International Computer Music Conference 2012, pp. 416-419.

van 't Klooster, A., 2012. 'Sonifying animated graphical scores and visualising sound in the hearimprov performance', in ICMC 2012: Non-Cochlear Sound, Proceedings of the International Computer Music Conference 2012, pp. 173-179.

van 't Klooster, A., 2012. 'The body as mediator of music in the Emotion Light', NIME2012, Michigan, USA, 21/5/2012 - 23/5/2012, in NIME2012 Conference Proceedings.